Deep learning: bidirectional recurrent neural network

What is a bidirectional RNN and why do we use it?

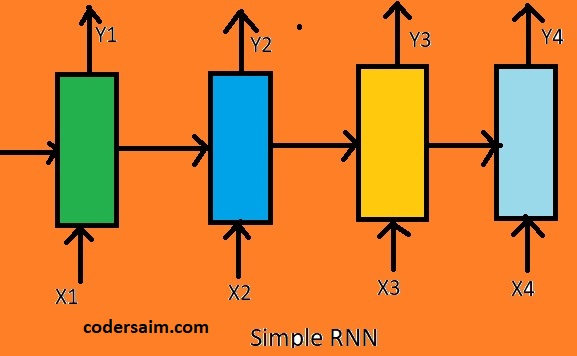

In the diagram, output Y3 depends on inputs X3, X2, and X1. To compute Y3, the network takes X3 as the current input and receives the information about X2 and X1 through the previous cell's output. In other words, Y3 depends only on the current and earlier values. But what happens if Y3 also depends on X4, a future value? The basic RNN architecture cannot solve this type of problem, because in a simple RNN information flows only forward: each step can use previous values but never future ones, so we can't use future words. To solve this type of problem we use the bidirectional RNN technique.

How does a bidirectional RNN work?
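The limitation described above can be checked with a small sketch. It assumes a one-unit RNN with a tanh cell and made-up weights (`w_x`, `w_h`, `b` are hypothetical values, not taken from the diagram). Changing only X4 leaves the state at step 3 untouched, which is why a forward-only RNN cannot use X4 when producing Y3.

```python
import math

def forward_rnn(xs, w_x=0.5, w_h=0.8, b=0.0):
    """Return the hidden state at every time step of a one-unit RNN.

    The weights are illustrative assumptions, not trained values.
    """
    h = 0.0  # initial hidden state
    states = []
    for x in xs:
        # Each step sees only the current input and the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

base = forward_rnn([1.0, 2.0, 3.0, 4.0])
changed = forward_rnn([1.0, 2.0, 3.0, -4.0])  # only X4 differs

print(base[2] == changed[2])  # state at step 3 ignores X4
print(base[3] == changed[3])  # state at step 4 does depend on X4
```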

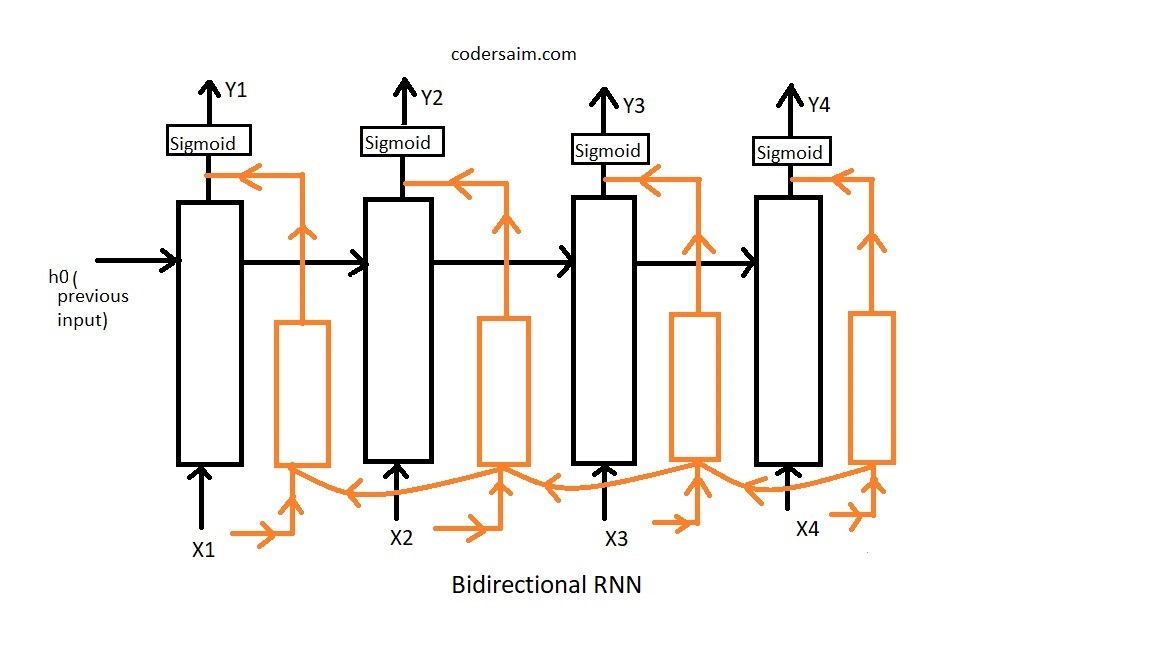

In the image, we can see a layer of orange neurons between the black neurons. The black layer is a normal (simple) RNN layer. The orange layer is the same as the black layer, except that it processes the sequence in the opposite direction, so we can call it the backward RNN layer. In a normal RNN, the first neuron passes its information to the second neuron, the second to the third, and the third to the fourth. This is how the black layer works. The orange layer passes information in the backward direction: the fourth neuron passes its information to the third, the third to the second, and the second to the first. The current inputs (X1, X2, X3, X4) are the same for both the black and the orange layers. At each position we combine the outputs of the black and orange neurons, apply a sigmoid activation function, and get the final output.
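As a rough illustration of the two layers, here is a minimal sketch in plain Python. It assumes one-unit tanh cells, combines the two outputs with a weighted sum before the sigmoid, and uses made-up weights; the function names (`run_layer`, `bidirectional_rnn`) and all numeric values are hypothetical and do not come from the diagram.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def run_layer(xs, w_x, w_h):
    """Run a one-unit tanh RNN over xs in the order given."""
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def bidirectional_rnn(xs, w_out=(1.0, 1.0)):
    # Black layer: reads X1 -> X4.
    fwd = run_layer(xs, w_x=0.5, w_h=0.8)
    # Orange layer: reads X4 -> X1, then is reversed again so that
    # bwd[t] lines up with the same position as fwd[t].
    bwd = run_layer(xs[::-1], w_x=0.3, w_h=0.6)[::-1]
    # Combine both outputs at each position and apply a sigmoid.
    return [sigmoid(w_out[0] * f + w_out[1] * b) for f, b in zip(fwd, bwd)]

outputs = bidirectional_rnn([1.0, 2.0, 3.0, 4.0])
print(outputs)  # one value between 0 and 1 per position (Y1..Y4)
```

Deep learning libraries often concatenate the forward and backward outputs instead of summing them; the weighted sum here is only the simplest way to "combine" two scalar outputs.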

Why do we need the backward layer (the orange layer)?

A simple RNN can't work with future values, so when an output depends on a future value we use a bidirectional RNN. Suppose that in the diagram output Y3 depends on X4.

How do we get X4?

Notice that the fourth orange neuron receives the same input as the fourth black neuron, so it holds the value X4. The fourth orange neuron is connected to the third orange neuron. For the third orange neuron, the current input is X3, and the value passed to it from the previous step of the backward pass carries X4. The output of the third orange neuron therefore depends on both X3 and X4.
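The same trace can be written as two equations. This is a sketch under assumed notation: $\overleftarrow{h}_t$ is the output of orange neuron $t$, $f$ is the cell's activation function, $V_x$ and $V_h$ are the backward layer's input and recurrent weights, the initial backward state is zero, and biases are omitted. None of these symbols appear in the original diagram.

$$
\overleftarrow{h}_4 = f\left(V_x X_4\right), \qquad
\overleftarrow{h}_3 = f\left(V_x X_3 + V_h \overleftarrow{h}_4\right)
$$

Substituting the first equation into the second shows that $\overleftarrow{h}_3$ is a function of both $X_3$ and $X_4$.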

After getting the outputs of both the third black neuron and the third orange neuron, we combine them, apply a sigmoid activation function, and get the final output Y3.

What was our problem?

Our problem was that we need X4 to compute output Y3, but the third black neuron cannot see X4. To get the final output we combine the outputs of the third black neuron and the third orange neuron, and the output of the third orange cell carries the X4 value. Combining the two outputs therefore brings X4 into the computation of Y3. This is how we use a future value in an RNN.
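To make the argument concrete, the computation of Y3 can be traced by hand, one neuron at a time. This is a sketch that assumes one-unit tanh cells, a plain sum as the "combine" step, and made-up weights; the constants `W_X`, `W_H`, `V_X`, `V_H` and the function `y3` are hypothetical names introduced only for this example.

```python
import math

# Hypothetical weights: W_* for the black (forward) layer,
# V_* for the orange (backward) layer.
W_X, W_H = 0.5, 0.8
V_X, V_H = 0.3, 0.6

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def y3(x1, x2, x3, x4):
    # Black layer: information flows X1 -> X2 -> X3.
    f1 = math.tanh(W_X * x1)
    f2 = math.tanh(W_X * x2 + W_H * f1)
    f3 = math.tanh(W_X * x3 + W_H * f2)  # never sees x4
    # Orange layer: information flows X4 -> X3.
    b4 = math.tanh(V_X * x4)
    b3 = math.tanh(V_X * x3 + V_H * b4)  # carries x4 back to position 3
    # Combine both third-position outputs and apply a sigmoid.
    return sigmoid(f3 + b3)

# Changing only X4 changes Y3, because b3 depends on b4.
print(y3(1.0, 2.0, 3.0, 4.0) != y3(1.0, 2.0, 3.0, -4.0))
```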